What are A/B tests? According to a popular definition, A/B testing is a method of comparing two design variants (of a contact form, for example) used to achieve the same goal. A/B testing is also sometimes called split testing.

The goal can be any action by a website user that is desirable from the point of view of a business owner operating on the Internet.

A/B testing in UX design is a kind of experiment that allows you to check which variant is more effective and enables you to achieve your goals more efficiently. It's also a convenient tool for a UX designer.

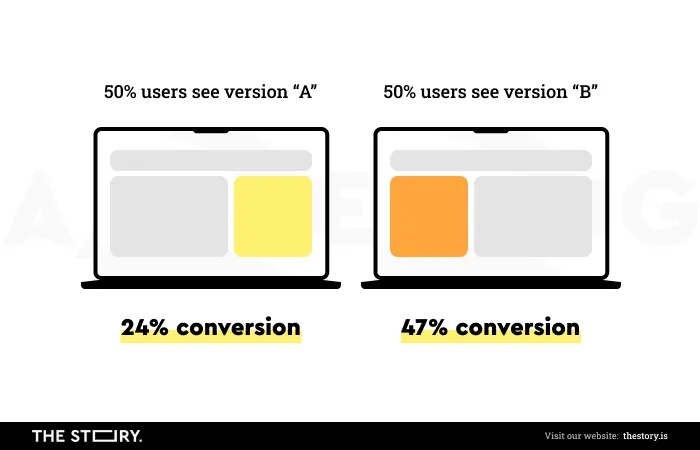

A/B tests involve showing design versions to a target audience: one version to one half of it and the second version to the other half. These tests aim to reveal which version performs better, meaning which variant users prefer. To determine that, designers and analysts use statistical analysis.
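To make the "one half sees A, the other half sees B" idea concrete, here is a minimal sketch of how users could be split. It assumes each user has a stable identifier; the function name `assign_variant` and the experiment name `contact-form-test` are hypothetical and not taken from any particular tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "contact-form-test") -> str:
    """Deterministically assign a user to variant 'A' or 'B' (roughly 50/50)."""
    # Hash the experiment name together with the user ID so that the same
    # user always lands in the same variant within one experiment.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # The parity of the hash value splits users into two halves.
    return "A" if int(digest, 16) % 2 == 0 else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # the two counts should be close to each other
```

Hashing instead of drawing a random number on every visit is a design choice: a returning user keeps seeing the same variant, which keeps the two groups cleanly separated.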

Although A/B tests have a very long tradition, they reached their full potential and usefulness with the development of the Internet and research tools that make conducting them less of an organizational and budgetary challenge.

Today it's hard to imagine optimizing digital products without this method.

While it's widely used and, at first glance, appears accessible even to laypeople and those without research experience and training, you shouldn't be led astray by the illusion of its ease.

A common view is that testing conducted in an unreliable, methodologically questionable, and unplanned manner is even worse than no testing at all.

It's worth keeping this in mind when deciding to perform an A/B test.

How to perform A/B tests? What are the types of A/B tests? What problems does conducting them create?

What should you pay attention to when performing such experiments?

I will answer the above questions in a moment.

I invite you to read the article!

How to perform an A/B test?

Let's go back to our questions for a moment: what is website A/B testing, and what does UX A/B testing consist of?

Nowadays, A/B testing is a widespread method, mainly in online marketing, but it's no less popular when you want to perform usability testing on a site.

It's equally useful when you want to see which variant provides users with a better User Experience.

A/B testing, as one of the most accessible testing methods, should become a permanent tool for any organization that wants to compete effectively and grow dynamically.

It should be important to any organization that cares about offering its users and customers digital products that meet their expectations.

Expectations, like experiences, evolve and change over time.

That's why A/B testing should also be used on a planned, regular basis, driven by the adopted optimization strategy and the development of a digital product.

The iterative approach to A/B testing is considered standard today.

A/B tests allow UX designers to determine what makes a particular element of a digital product more user-friendly. They also enable the design team to see how even the smallest changes to a design can impact user behavior.

A/B testing is often associated with another equally popular term: Conversion Rate Optimization (CRO).

Conversion is any action a website user performs that is valuable from the business owner's point of view.

It can be subscribing to a newsletter, sending an inquiry, downloading a file, or clicking on an ad.

A/B testing allows you to achieve your business goals more effectively and positively impacts user experience.

Best of all, A/B tests enable you to replace assumptions, ideas, intuitions, and beliefs with hard data that show which solution works more effectively and brings greater benefits.

Thanks to A/B testing, business decisions are much more sensible. They are based on data as well as measurable and comparable premises. Optimizing the experience with A/B testing also allows you to see the scale of the difference.

The results of A/B testing make it possible to provide much more accurate answers to crucial business questions.

They help you get much better sales results, improve conversion results, and use site traffic more effectively.

For example, they can show whether key changes to a home page actually increase the number of conversions, and they help you avoid the negative impact of misguided design solutions.

Moreover, instead of saying, "I think I know," A/B testing allows you to state, "I'm pretty sure about this, and I have evidence for it."

Of course, absolute certainty is impossible to achieve, as in all UX research.

Nevertheless, testing provides a sufficient level of certainty to make decisions that are beneficial from the point of view of goals and business processes.

As I've already mentioned, A/B testing may seem like a straightforward method that doesn't require experience and knowledge.

That's a very harmful myth, because the value of the results depends on the reliability and methodological correctness of the UX study and usability testing, and on how they are carried out.

Even worse than a lack of research is a poorly and incorrectly done study, because it creates false certainty: a strong belief in the rightness of decisions that rest on faulty or false premises.

Also, you should remember to collect data regarding the performance of your current design before you start performing A/B testing.

What are the types of A/B tests?

Before we perform a study, we must check the tool with which we will test variants within a website.

For this, you will need A/A tests that compare two identical designs.

A/A Tests

No statistically significant difference in results should appear as an outcome of the comparison.

A/A tests aren't designed to identify the more effective variant but to ensure maximum reliability of the obtained results.

The A/A tests and the results obtained through them also provide a specific reference point against which you will compare the results of the actual tests.

So, if you care about the quality of a study, its reliability, and its usefulness, it is necessary to check the research tool in terms of accuracy.
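As an illustration of what "no statistically significant difference" means in an A/A test, the sketch below simulates many A/A runs in which both groups share the same true conversion rate. It uses a pooled two-proportion z-test, which is one common choice and not necessarily what your testing tool uses; the traffic numbers, the 10% rate, and the 5% significance level are assumptions made only for the example.

```python
import math
import random
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # identical all-or-nothing outcomes: no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def aa_false_positive_rate(runs: int = 500, users_per_group: int = 2000,
                           true_rate: float = 0.10, alpha: float = 0.05,
                           seed: int = 42) -> float:
    """Share of simulated A/A tests that are (wrongly) flagged as significant."""
    rng = random.Random(seed)
    flagged = 0
    for _ in range(runs):
        # Both groups are drawn from the same conversion rate: a true A/A test.
        conv_a = sum(rng.random() < true_rate for _ in range(users_per_group))
        conv_b = sum(rng.random() < true_rate for _ in range(users_per_group))
        if two_proportion_p_value(conv_a, users_per_group, conv_b, users_per_group) < alpha:
            flagged += 1
    return flagged / runs


if __name__ == "__main__":
    print(f"A/A runs flagged as significant: {aa_false_positive_rate():.1%}")
```

A healthy setup should flag roughly the share of runs given by the significance level (about 5% here) purely by chance. A tool that reports "winners" in A/A tests much more often than that deserves a closer look before you trust its A/B results.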

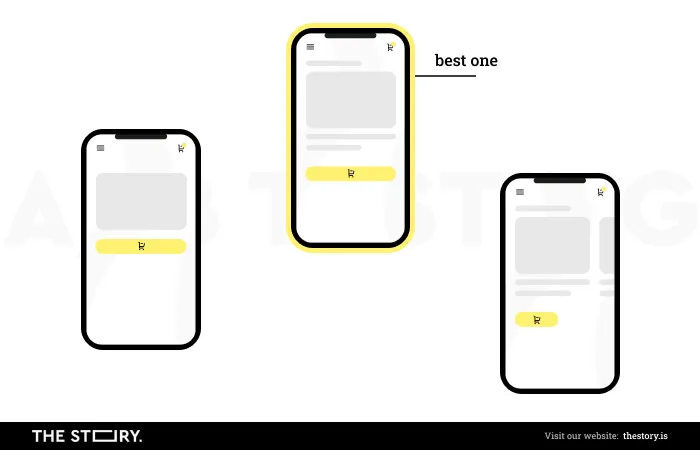

A/B/n Tests

The name A/B tests can be a bit misleading. It suggests that only two design versions can be compared, which isn't true, as A/B/n tests are often performed.

It's possible to compare any number of variants with them.

In A/B/n testing, variant A is the control version, and the other versions are variations of it. The advantage of this method is, of course, convenience and the ability to compare more designs.

Its limitation is time. An extended period is usually required to achieve statistically significant results.

Multivariate Testing – MVT

Multivariate testing can be viewed as an extended version of A/B testing. While the latter is used to compare a single variable (e.g., headline, color of a button), with MVT, it is possible to compare multiple variables simultaneously.

Thanks to multivariate testing, it is possible to experiment with multiple combinations (e.g., user interface, text).

That helps decide which variable configuration is the most efficient and which meets users' interests.

Multivariate testing allows you to check, for example, how an addition, deletion, or edit affects the conversion.

That said, it's important to remember that in multivariate testing, you are comparing, at a minimum, two items, and each item must be in two different versions. Therefore, even the simplest multivariate test covers at least four combinations (2 × 2).
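The way combinations multiply can be sketched in a few lines. The element names and versions below are made up for the example; the helper `build_combinations` is hypothetical.

```python
from itertools import product
from typing import Dict, List


def build_combinations(elements: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Return every combination of element versions for a multivariate test."""
    names = list(elements)
    # product() yields one tuple per combination, e.g. ("Short", "red").
    return [dict(zip(names, versions))
            for versions in product(*(elements[name] for name in names))]


if __name__ == "__main__":
    # Two elements in two versions each give 2 x 2 = 4 combinations.
    simple = build_combinations({
        "headline": ["Short", "Long"],
        "button_color": ["red", "yellow"],
    })
    for combination in simple:
        print(combination)
    print(len(simple), "combinations")
```

Adding a third element with three versions would already raise the total to 2 × 2 × 3 = 12 combinations, each of which needs its own share of traffic.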

Multivariate testing also poses a significant organizational challenge. Another limitation of this test is the lack of certainty about the reasons for a design's popularity or lack thereof.

It's hard to conclusively and confidently say why a particular combination won.

That is why you should carefully approach the results of multivariate tests, which doesn't mean their cognitive value is low.

Split URL Testing

Split URL testing is often used synonymously with A/B testing, but they're, in fact, two different methods. In A/B tests, two versions of a given website element are compared.

The Split URL test compares two variants of an entire site.

So this is a much more extensive method that allows you to compare, for example, a new website design (version A) with its old version (version B).

Of the methods described above, multivariate testing is the most demanding, because it needs a high level of traffic.

On top of that, the more variables that make up the combinations, the more variants need to be tested, making it difficult for even high-traffic sites to collect enough data for every variant.

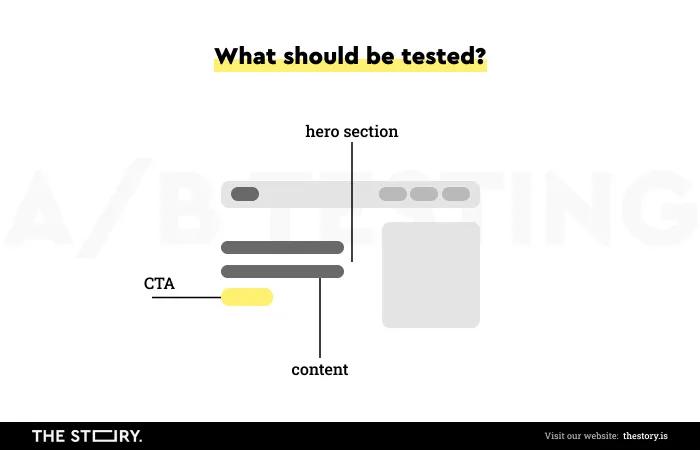

The most popular elements tested using A/B tests

The most common elements tested with A/B testing include the following:

- Headlines

- The content of a website

- The layout of a page

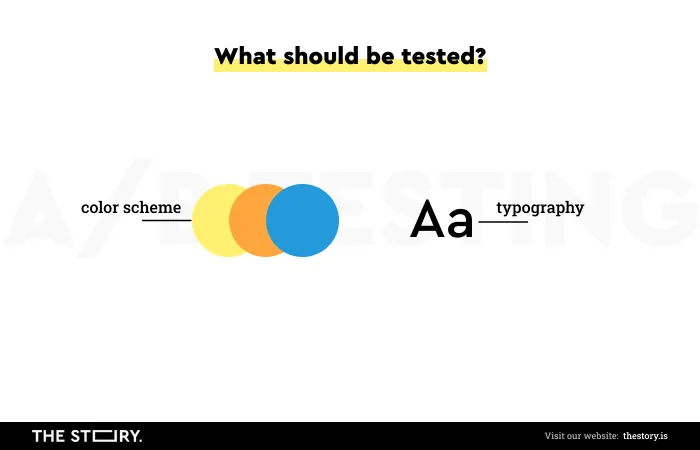

- The color scheme of a site

- Navigation

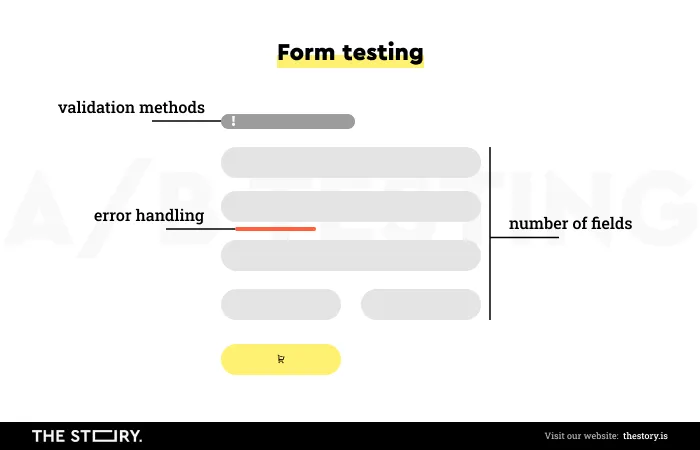

- Forms

- Call-to-action button (CTA)

- Social Proof

- Rating systems.

Headlines are among the most noticeable elements of a site and fulfill an essential function in information structure.

They're responsible for the effect of the first impression, so their length, communicativeness, comprehensibility, concreteness, and unambiguity are a priority.

In addition, headlines should be factual and catchy.

A/B tests make it possible to implement changes and test variants in a stylistic, linguistic, and visual sense (for example, by changing the size, type, color, or typeface).

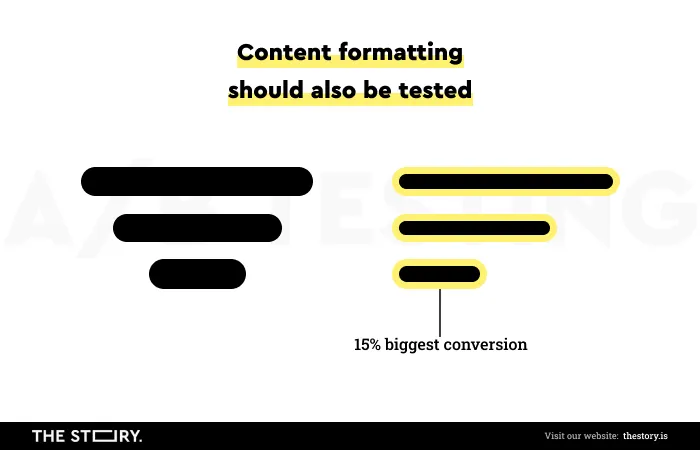

A site's content can also be tested for its readability and the user-friendliness of its formatting.

The content of a site not only has an informative function but also has an impact on conversion. Therefore the appropriate style of the content and the way it is presented (the layout of headlines, subheadlines, paragraphs, the typography used, bolding, and all caps) is of great importance.

Testing content variants allows you to choose the most beneficial configuration for users and business owners.

Page layout and navigation are other essential elements (responsible for a satisfying User Experience) that can be optimized with the help of A/B testing.

Challenging and disliked page elements, such as forms, can be effectively optimized through comparative testing.

Thanks to A/B tests, you can check how attractive and effective a form is depending on the number of fields, its comprehensibility, validation methods, error handling, and many other aspects of form design.

Statistics and validity of A/B testing

When performing A/B tests, two statistical approaches are used:

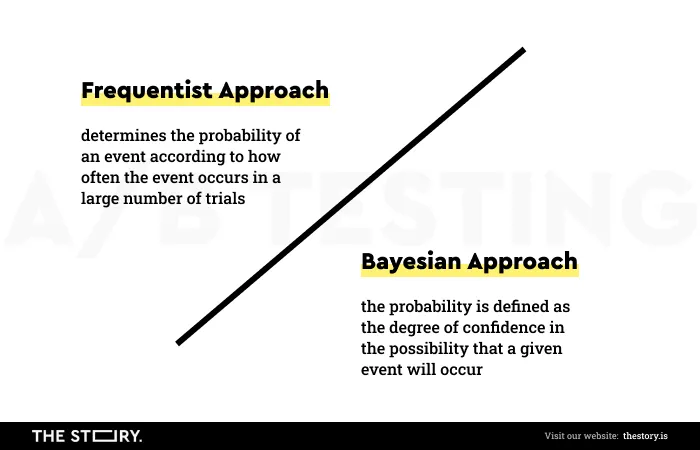

- Frequentist Approach

- Bayesian Approach.

The Frequentist Approach determines the probability of an event according to how often the event occurs in a large number of trials.

In other words, to draw binding, accurate conclusions based on the results, it's necessary to perform tests over a more extended period and on a larger number of users utilizing the given variants.

In this approach, only data from the current experiment is used.
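A small sketch can show why the frequentist view calls for more users. It computes a confidence interval for the difference between two conversion rates using the normal approximation (a Wald interval), which is one standard option among several. The visitor and conversion counts are invented for illustration.

```python
import math
from statistics import NormalDist
from typing import Tuple


def difference_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                                   confidence: float = 0.95) -> Tuple[float, float]:
    """Confidence interval for (rate of B - rate of A), normal approximation."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    # Critical value, about 1.96 for a 95% interval.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se


if __name__ == "__main__":
    # Same observed rates (4% vs. 5%), ten times more users in the second case.
    for n in (500, 5000):
        low, high = difference_confidence_interval(n * 4 // 100, n, n * 5 // 100, n)
        print(f"{n} users per variant: difference between {low:+.4f} and {high:+.4f}")
```

With 500 users per variant the interval still includes zero, so the data cannot tell the variants apart. With 5,000 users and the same observed rates, the interval lies entirely above zero.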

In the Bayesian Approach, probability is defined as the degree of confidence in the possibility that a given event (such as using a contact form) will occur.

The prediction depends on the scope, depth, and diversity of the available information and on the knowledge built on it.

Simply put, the more we know about a given event, the more accurately and quickly we can predict the outcome.

The probability in this approach isn't a constant value but a variable that is shaped by the growth of information and knowledge.

Moreover, the Bayesian Approach makes it possible to:

- Use past data (e.g., results obtained in earlier tests)

- Often obtain results faster, although speed alone doesn't rule out unreliable or inaccurate results

- Conduct research in a more time-flexible manner.
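One common way to put the Bayesian approach into practice for conversion data is the Beta-Binomial model, sketched below. It is only one possible model, and the numbers are invented. The parameters `prior_alpha` and `prior_beta` are where past data can enter: the default of 1 and 1 is a flat prior that assumes no earlier knowledge.

```python
import random


def probability_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                          prior_alpha: float = 1.0, prior_beta: float = 1.0,
                          draws: int = 20_000, seed: int = 0) -> float:
    """Estimate P(conversion rate of B > conversion rate of A) by sampling."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior of each rate: Beta(prior_alpha + conversions,
        #                              prior_beta + non-conversions).
        sample_a = rng.betavariate(prior_alpha + conv_a, prior_beta + n_a - conv_a)
        sample_b = rng.betavariate(prior_alpha + conv_b, prior_beta + n_b - conv_b)
        if sample_b > sample_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    p = probability_b_beats_a(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
    print(f"Probability that B is better than A: {p:.1%}")
```

The output reads as a direct statement ("B is better with probability X"), which many teams find easier to act on than a p-value. The quality of that statement still depends on the amount and quality of the data behind it.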

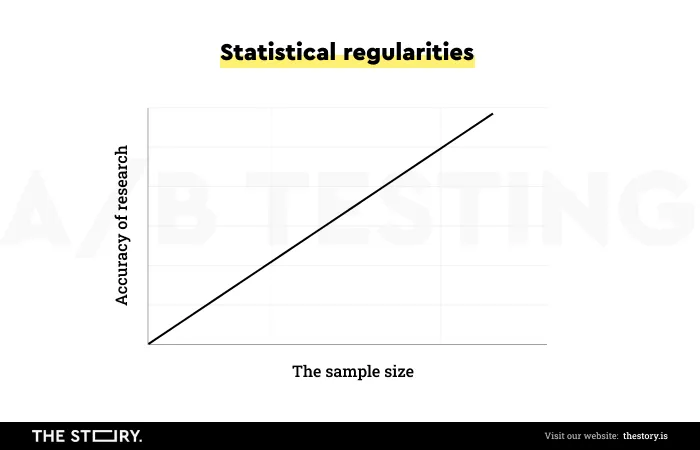

When analyzing the results of A/B tests, it's useful to be aware of several statistical regularities, rules, and relationships.

For example:

- The larger the sample size, the more accurate the average is

- The higher the variability, the less accurate the average will be in predicting a single data point

- The lower the level of statistical significance, the greater the probability that the winning variant isn't actually a winner

- The more extreme the results in the first measurement, the more likely the results will be closer to the average in the second measurement.
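The first two regularities follow from the standard error of the mean, which equals the standard deviation divided by the square root of the sample size. A minimal sketch, with arbitrary example values:

```python
import math


def standard_error(std_dev: float, sample_size: int) -> float:
    """Standard error of the mean: std_dev / sqrt(sample_size)."""
    return std_dev / math.sqrt(sample_size)


if __name__ == "__main__":
    # Larger sample, same variability: the average becomes more precise.
    print(standard_error(2.0, 100))  # 0.2
    print(standard_error(2.0, 400))  # 0.1
    # Higher variability, same sample: the average becomes less precise.
    print(standard_error(4.0, 100))  # 0.4
```

Note the square root: to halve the uncertainty of an average, you need four times as many users, not twice as many.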

The methodology of conducting the A/B test

Meticulous adherence to the procedures recommended for A/B tests is not so much advisable as simply necessary to obtain reliable and useful results in design and business.

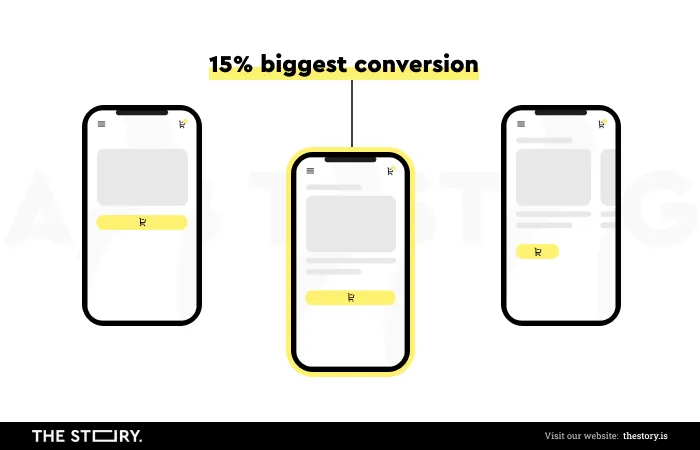

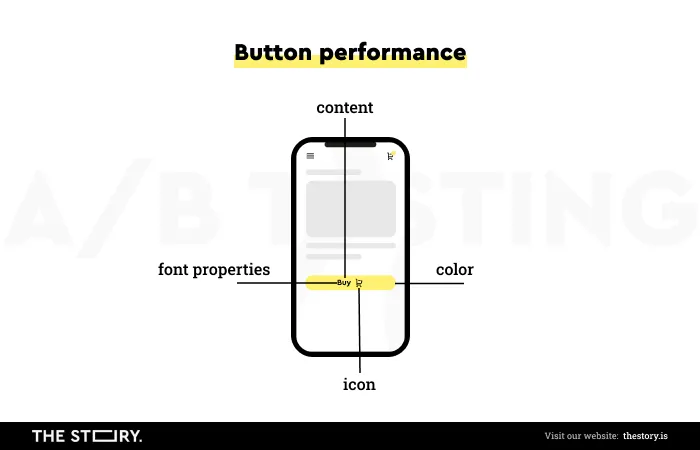

Let's look at an example. Suppose we want to study the effectiveness of a button, for example, the "Add to cart" button, whose current results are unsatisfactory.

Precisely identifying the research problem and defining what we want to study and which metrics we will use is crucial.

In this example, we will measure the button's performance by manipulating only one variable, for example, the color scheme, font size, or content of the call to action.

It isn't a matter of generating ideas for a study but of posing a specific hypothesis that can be falsified or confirmed.

For instance: changing the color from red to yellow increases the conversion.

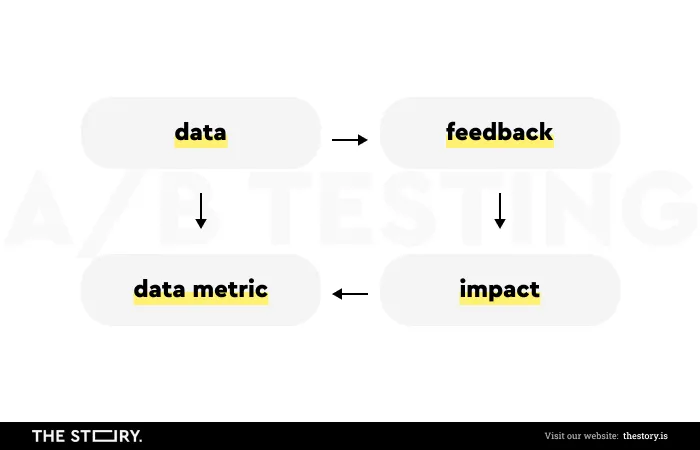

In formulating hypotheses, the template proposed by Craig Sullivan in his article "Hypothesis Kit 3" is very helpful.

It looks like this:

- Because we observe the Data/we have Feedback

- We can expect the Change to cause an Impact

- Which we can measure with Data Metrics.

The number of button clicks in a given variant will be a performance indicator and will be your metric.

The more people click on the button in a given time frame, the higher the button's performance will be compared to the other variants. In the next step, you need to specify how long you want the test to last. Its length should be determined based on past site performance and expected traffic.

It's worth remembering that the traffic will be divided between the two variants, so you should take this into account in your assumptions.
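A rough estimate of the test length can be sketched as follows. The code uses a standard sample-size formula for comparing two proportions; the 5% significance level and 80% power are common conventions assumed here, not values from this article, and the baseline rate, expected rate, and daily traffic are invented. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of users needed in each variant (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def estimate_duration_days(users_per_variant: int, daily_visitors: int,
                           variants: int = 2) -> int:
    """Days needed when daily traffic is split evenly between all variants."""
    return math.ceil(users_per_variant * variants / daily_visitors)


if __name__ == "__main__":
    needed = sample_size_per_variant(baseline_rate=0.04, expected_rate=0.05)
    print("Users per variant:", needed)
    print("Days with 2 variants:", estimate_duration_days(needed, daily_visitors=1000))
    print("Days with 4 variants:", estimate_duration_days(needed, daily_visitors=1000, variants=4))
```

The `variants` parameter shows why tests with more variants take longer: the same daily traffic has to be shared among more groups. In practice, it's also common to round the duration up to full weeks so that every day of the week is represented equally.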

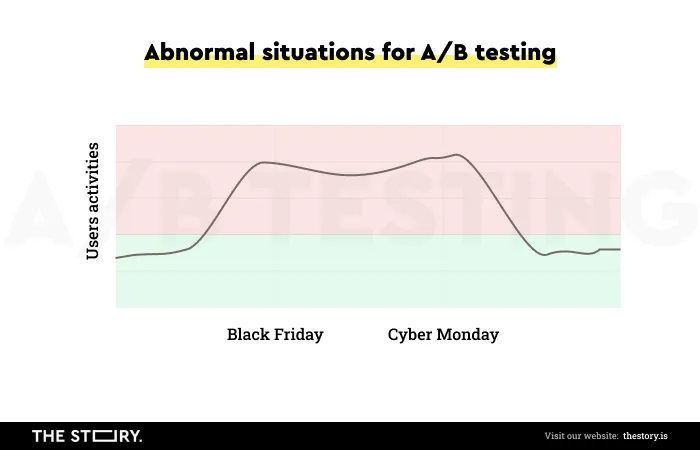

An issue that cannot be ignored is the problem of external factors, abnormal situations, or situations that deviate from the common norm, which can affect performance.

This concerns periods when traffic is higher than normal, for example, during:

- Promotional periods (e.g., Black Friday, Cyber Monday)

- Advertising activities

- Different seasons – especially relevant in industries where seasonality is a significant variable.

In a statistical sense, the above situations are anomalies and may distort the results. A variant that is more effective during special periods may be less effective at other times.

It's also essential to consider the device type behind the traffic (mobile vs. desktop), as the channel in which the interaction occurs is a variable that conditions the results.

The tests must be performed in identical conditions and contexts to be reliable and comparable.

In the statistical theory of experimental design, grouping users by such a variable and comparing the variants within each group is called blocking.

Blocking reduces unexplained variability and helps produce more precise results.

Depending on the needs, research objectives, and adopted hypotheses, it is possible to segment users in more detail, including by other variables (e.g., location).
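Comparing variants within each segment can be sketched like this. The event format (segment, variant, converted) and the function name `conversion_by_segment` are assumptions made for the example; real analytics exports will look different.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def conversion_by_segment(
    events: Iterable[Tuple[str, str, bool]]
) -> Dict[Tuple[str, str], float]:
    """Conversion rate for every (segment, variant) pair."""
    # (segment, variant) -> [conversions, visitors]
    totals = defaultdict(lambda: [0, 0])
    for segment, variant, converted in events:
        cell = totals[(segment, variant)]
        cell[0] += int(converted)
        cell[1] += 1
    return {key: conversions / visitors
            for key, (conversions, visitors) in totals.items()}


if __name__ == "__main__":
    sample_events = [
        ("mobile", "A", True), ("mobile", "A", False),
        ("mobile", "B", True), ("mobile", "B", True),
        ("desktop", "A", False), ("desktop", "A", False),
        ("desktop", "B", True), ("desktop", "B", False),
    ]
    for (segment, variant), rate in sorted(conversion_by_segment(sample_events).items()):
        print(f"{segment:8} {variant}: {rate:.0%}")
```

Looking at A versus B inside each segment keeps a difference between mobile and desktop users from being mistaken for a difference between the variants. Keep in mind that every additional segment reduces the number of users per group, so each segment needs enough traffic of its own.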

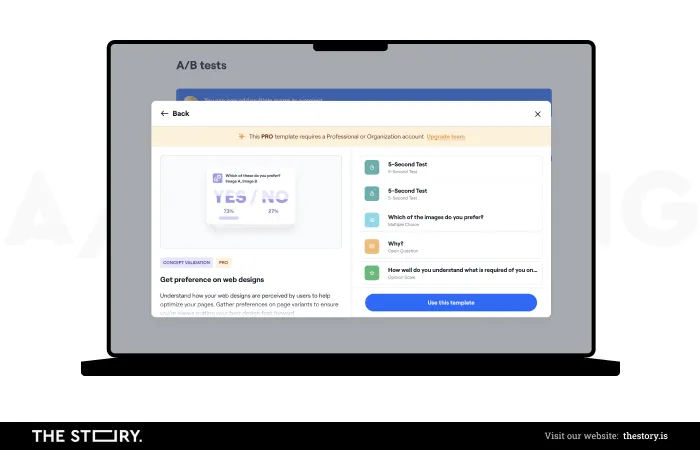

Once you've prepared the test and created the variants based on the accepted hypothesis or hypotheses, which will be compared with the control version, you can run the test in one of the numerous tools available for conducting A/B tests.

You can find many different tools on the market. Google Optimize was for years one of the most popular tools for conducting A/B tests, and its basic version allowed you to run tests at no cost, but Google discontinued it in September 2023. You can also take a look at Visual Website Optimizer, Poll the People, or Optimizely.

Common mistakes made during A/B testing

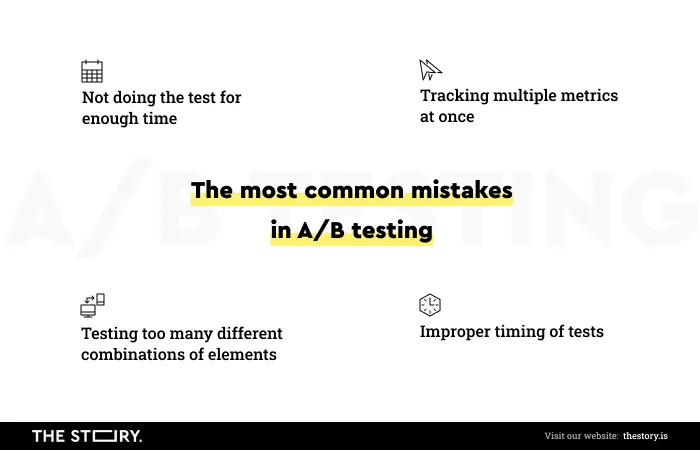

One of the most common mistakes made when performing A/B tests (e.g., when testing a landing page) is to end them prematurely.

The temptation to do so stems from early results that appear to support the research hypothesis. The hypothesis seems correct, and further testing looks like a waste of valuable time.

In the literature on this subject, this kind of error is classed as p-hacking, one of the Questionable Research Practices (QRP).

Such practices are committed by researchers who violate the assumptions of statistical inference to confirm their research hypotheses.

It's crucial for the reliability of the tests that they run long enough to collect sufficient data on which to base further design decisions.
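The cost of stopping early can be demonstrated with a simulation. In the sketch below, both variants have exactly the same true conversion rate, so every "significant" result is a false alarm. One scenario checks the result only once, at the planned end; the other checks after every batch of users and stops as soon as the difference looks significant. All numbers (batch size, number of checks, the 10% rate, the 1.96 threshold for a 5% significance level) are assumptions chosen for illustration.

```python
import math
import random


def false_positive_rate(peek: bool, runs: int = 400, checkpoints: int = 10,
                        users_per_checkpoint: int = 200, true_rate: float = 0.10,
                        z_crit: float = 1.96, seed: int = 1) -> float:
    """Share of simulated tests without a real difference that declare a winner."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        for step in range(checkpoints):
            # Each checkpoint adds a new batch of users to both variants.
            conv_a += sum(rng.random() < true_rate for _ in range(users_per_checkpoint))
            conv_b += sum(rng.random() < true_rate for _ in range(users_per_checkpoint))
            n += users_per_checkpoint
            is_last = step == checkpoints - 1
            if peek or is_last:
                # Pooled two-proportion z-test with equal group sizes.
                pooled = (conv_a + conv_b) / (2 * n)
                se = math.sqrt(pooled * (1 - pooled) * 2 / n)
                if se > 0 and abs(conv_b - conv_a) / n / se > z_crit:
                    false_positives += 1
                    break  # the test is stopped and a "winner" is declared
    return false_positives / runs


if __name__ == "__main__":
    print(f"Checked once at the end: {false_positive_rate(peek=False):.1%}")
    print(f"Checked after every batch: {false_positive_rate(peek=True):.1%}")
```

Checking only at the planned end keeps false alarms near the chosen significance level, while stopping at the first significant-looking result makes them noticeably more frequent. That's why the test length should be fixed before the test starts.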

An equally common mistake is choosing not to repeat studies. The risk of a false result can never be ignored; thus, repeat testing is simply necessary.

It's important to remember that user preferences evolve and change over time, so diagnosing them again at regular intervals is highly recommended.

The length of the tests and the sample size should be adjusted each time according to the research problem, the hypothesis, the volume of traffic, and the period in which the study is to be conducted.

The number of variants, general hypotheses, and detailed hypotheses also affects the study duration and sample size needed to achieve statistical significance.

The quality of the traffic itself and its balance significantly impact the quality of test results.

How to perform A/B tests? Summary

- What are A/B tests? A/B testing is a method of comparing two design variants used to achieve the same goal.

- With the help of the A/B test, you will determine which variant is more effective and allows you to achieve your goals more efficiently.

- A/B UX testing is a method used to test the usability of a website, web page, or other separate elements.

- It's recommended to use A/B testing on a regular and recurring basis.

- It's a research method that increases the effectiveness, attractiveness, friendliness, and usability of a site as such and individual elements on a page, such as ads.

- A/B UX tests enable you to improve your conversion rate, a process known as Conversion Rate Optimization (CRO).

- In general, A/B testing provides you with all sorts of valuable data.

- When you optimize a website, A/B tests allow you to replace assumptions, ideas, intuitions, and beliefs with hard data, providing much more accurate answers to crucial business questions (e.g., how to increase the conversion and get a higher CTR?).

- They also help achieve much better sales results and make more effective use of site traffic.

- The reliability, methodological correctness of testing, and the way the whole process is carried out influence the value of the results that will be obtained with their help.

- A/A tests are designed to ensure maximum reliability of the obtained results.

- A/B/n tests make it possible to compare any number of variants.

- Multivariate tests enable you to compare several page elements, each in different versions, and their possible combinations simultaneously (for example, they allow you to compare product page variants, which is especially important if you run an online store).

- The Split URL test compares two variants of an entire site.

- With the help of A/B testing, headlines, content, page layout, color schemes, and navigation are most often tested. A/B tests are often used to optimize conversions and landing pages and to implement more effective functionalities on a site.

- When conducting an A/B test, two statistical approaches are used: the Frequentist Approach and the Bayesian Approach.

- Methodological guidelines must be followed to obtain reliable and useful results in design and business, supporting landing pages and their development.

- You should adjust the length of the tests and the sample size each time according to the research problem, the hypothesis, the volume of traffic, and the period (tests shouldn't be run during highly anomalous periods).